AI Slop Has Infiltrated American Newspaper Articles. 9% of them, to be exact.

A recent analysis of 1,500 American newspapers finds that a shocking 5% of articles are fully AI-written and another 4% are “mixed,” for a total of 9%. The rate was higher for articles about technology (16%; no surprise!) and health (12%; no good!).

On January 10, 2024, at a US Senate hearing titled “AI and the Future of Journalism,” Senator Richard Blumenthal sounded the alarm.

“First, Meta, Google and OpenAI are using the hard work of newspapers and authors to train their AI models without compensation or credit… those models are then used to compete with newspapers and broadcasters,” he said.

He was right. Today, journalism is experiencing an existential crisis due to a singular threat: AI.

We used to run a Google search on a topic and click on the news article that came up. Not anymore. The AI summary at the top of the page is often enough, so people don’t make the extra “click” through to the news story. This is a media company’s nightmare: “zero-click search.”

As a result, internet traffic to top US newspapers is down 45% in the last four years. MSN, CNN, Fox News and others are seeing 30-40% drops in traffic.

And when traffic drops, so does revenue. More than 100 US newspapers have closed since last year, creating local news deserts. The number of newspaper journalists shrank from over 360,000 in 2005 to about 90,000 today. Reporter and journalist jobs are expected to keep declining for years to come.

And it’s not just the external threat from big tech. AI-generated content is already infiltrating and undermining the media from within.

The other day I asked ChatGPT, “How much material in the news media is written by AI?”

The question was prompted by a personal experience. I was working with Sam (not their real name), a PR agent at a top global agency, on placing an op-ed. “Your piece is great, but I revised it to make it clearer and shorter,” Sam wrote. Strange, I thought. The piece had been completely rewritten; not a single sentence remained intact. Then it hit me. “Wow, incredible! Makes me wonder if you used ChatGPT? I mean it as a compliment,” I texted Sam, worried they would be offended if I guessed wrong.

“Haha… We use something similar and more powerful,” Sam responded. “Our proprietary AI tool that we use to help us with editing and writing combines ChatGPT and other platforms. We wouldn’t just say, ‘rewrite this article.’ You won’t get a very good output. To get a quality output requires smart, extensive direction, including drafting a version yourself, or at least an extensive outline with explicit prompts on what you want the output to look like, then using your skill to continue editing and refining, re-running it through AI, and then refining some more, and so on until you get what you need… **Almost all professional writers, both in media and PR, are using it to some extent.**” (emphasis mine)

This is not a secret. The CEO of Omnicom, a giant media conglomerate, said recently that the company is using AI to “accelerate creative ideation.” “OmniAI, the agency’s in-house AI platform, provides teams with generative AI models for text, graphics, video and audio, trained for agency-specific use cases. The company is aiming to have every client-facing employee using OmniAI by the end of the year,” according to a recent report.

Sam and I agreed not to use AI with my work. All my writing here on ETPNews.com or elsewhere is 100% human.

But why is AI-written journalism a problem, anyway? In my opinion, the main reason comes down to the concept of “slop.”

“Slop” is an interesting word. Its original meaning was “human food scraps fed to animals,” or just “mud.” The term is still used in horse racing to describe a muddy track after rain, as Kramer famously put it in Seinfeld: “Oh, this baby loves the slop, loves it, eats it up. Eats the slop. Born to slop.”

But “slop” also means “a product of no value,” as in, “watching the usual slop on TV.” The word “sloppy” comes from the same root.

In mid-2024, the term acquired a new use: “digital content of low quality that is produced usually in quantity by means of artificial intelligence.”

Max Spero, the CEO of a Brooklyn-based company called Pangram, is one of the world’s leading experts on AI slop. Pangram is a world leader in detecting it, a hugely important task. More on this below.

On a recent podcast, Spero gave a great example of “slop” in restaurant reviews:

“I recently had the pleasure of visiting so-and-so restaurant nestled in the heart of Brooklyn. From the moment I walked in, I could tell that the ambiance was beautiful…”

“It uses fluffy adjectives,” Spero explains, “and overuses clichés, without cutting to the core of the experience. Because AI did not actually have this experience.”

This is exactly the problem with AI slop. AI did not actually go to the restaurant. Yet it can instantly write a review of any length you want: 1 line, 100 lines, or 1,000 lines of pure slop.

But there is an even bigger problem: subtle shifts in meaning. If you write down your detailed impressions of the restaurant and ask AI to rewrite your piece, the result will be beautiful and eloquent. It can write in the style of Shakespeare or Dr. Seuss, or make the piece shorter or longer. But the smells, the tastes and the feelings of the restaurant will be distorted. AI was not there.

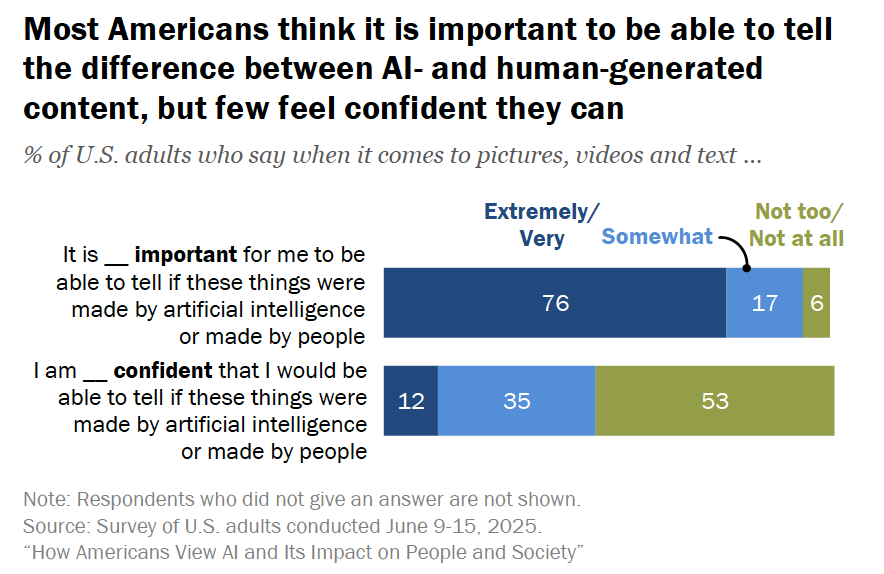

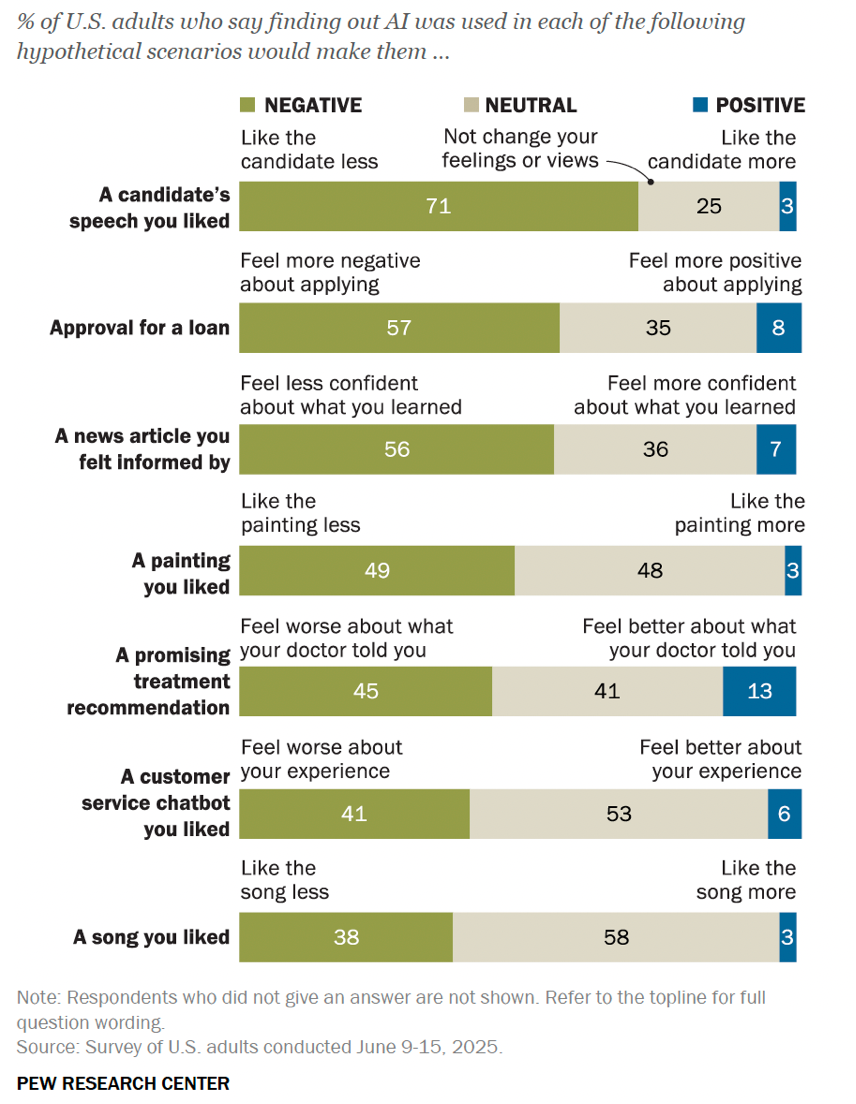

This limitation of AI is understood by the majority of Americans, and they don’t like it. A recent Pew Charitable Trust survey showed that 94% of Americans want to know whether an article was AI-written; 53% (correctly) feel they couldn’t tell whether it was; and 56% would feel less confident in the article if it was.

What if I took a few newspaper articles and pasted them into some kind of AI detector? I wondered. Hence my question to ChatGPT, to see whether anyone had already done this.

It turns out someone had, just a few weeks earlier. On October 21, a paper quietly appeared on the prestigious arXiv preprint server, titled “AI use in American newspapers is widespread, uneven, and rarely disclosed.”

Spero is a key author; the paper relied on Pangram’s AI detector. (Spero and the other authors have not responded to our podcast invitation. Yet.)

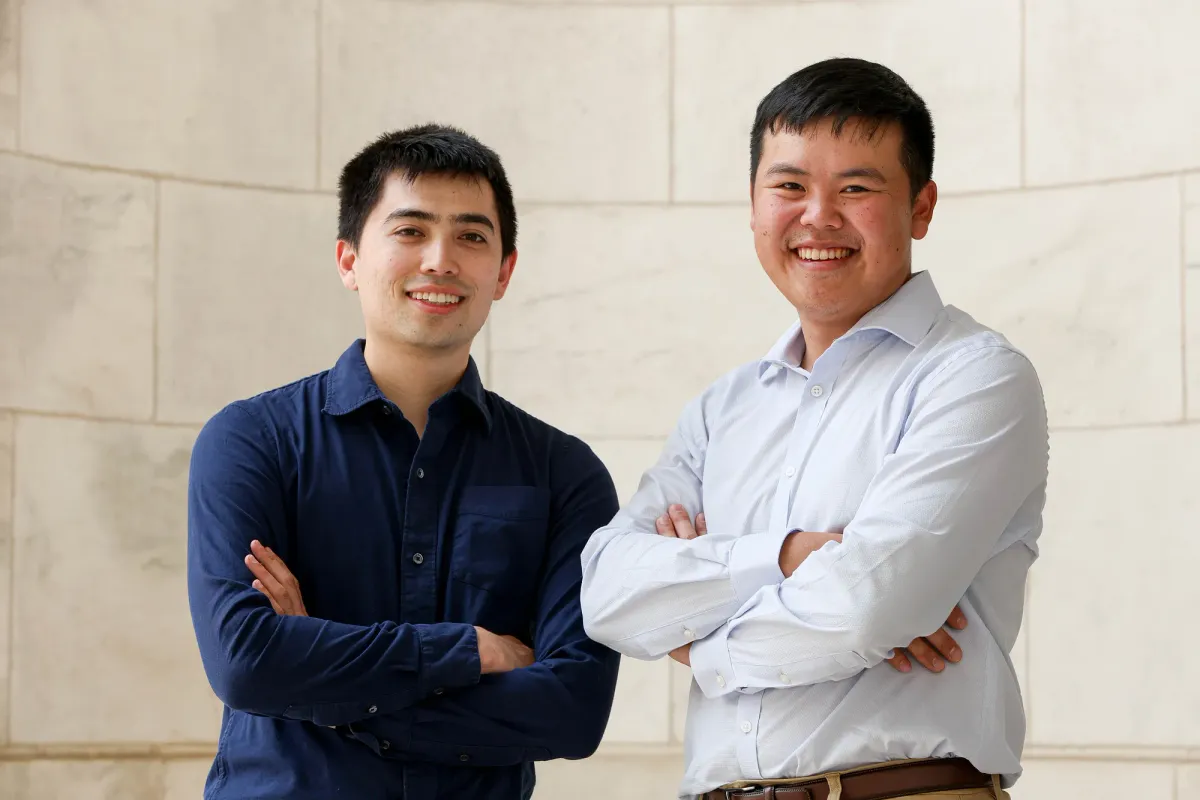

In 2023, when GPT-4 took the world by storm, Spero realized that AI slop was coming and that there would be a need to detect it. He and Bradley Emi, a friend from their undergraduate days at Stanford, quit their jobs at leading AI companies to start Pangram with one goal: to become the best at detecting AI text.

It seems they’ve succeeded. They can detect more than 99% of AI-generated text, even from GPT-5, where other tools struggle. And their false-positive rate for news articles is about 0.001%, “orders of magnitude better than other tools,” says Spero.

They can also distinguish “fully AI-written” from “mixed” articles. A “mixed” article might be one that a human wrote and AI then edited, for example.

This is no small feat. People use all kinds of tricks to evade detection. You can, for example, tell the model to “write this essay at the 8th grade level.”

There are even “AI humanizers,” special tools that make AI text look more human. So far, Pangram has been able to detect their output as well, setting off an AI-detection arms race.

The October paper is primarily the work of Jenna Russell, a PhD student in computer science at the University of Maryland; her mentor, Mohit Iyyer; other colleagues from the university; and Spero, Emi and others at Pangram.

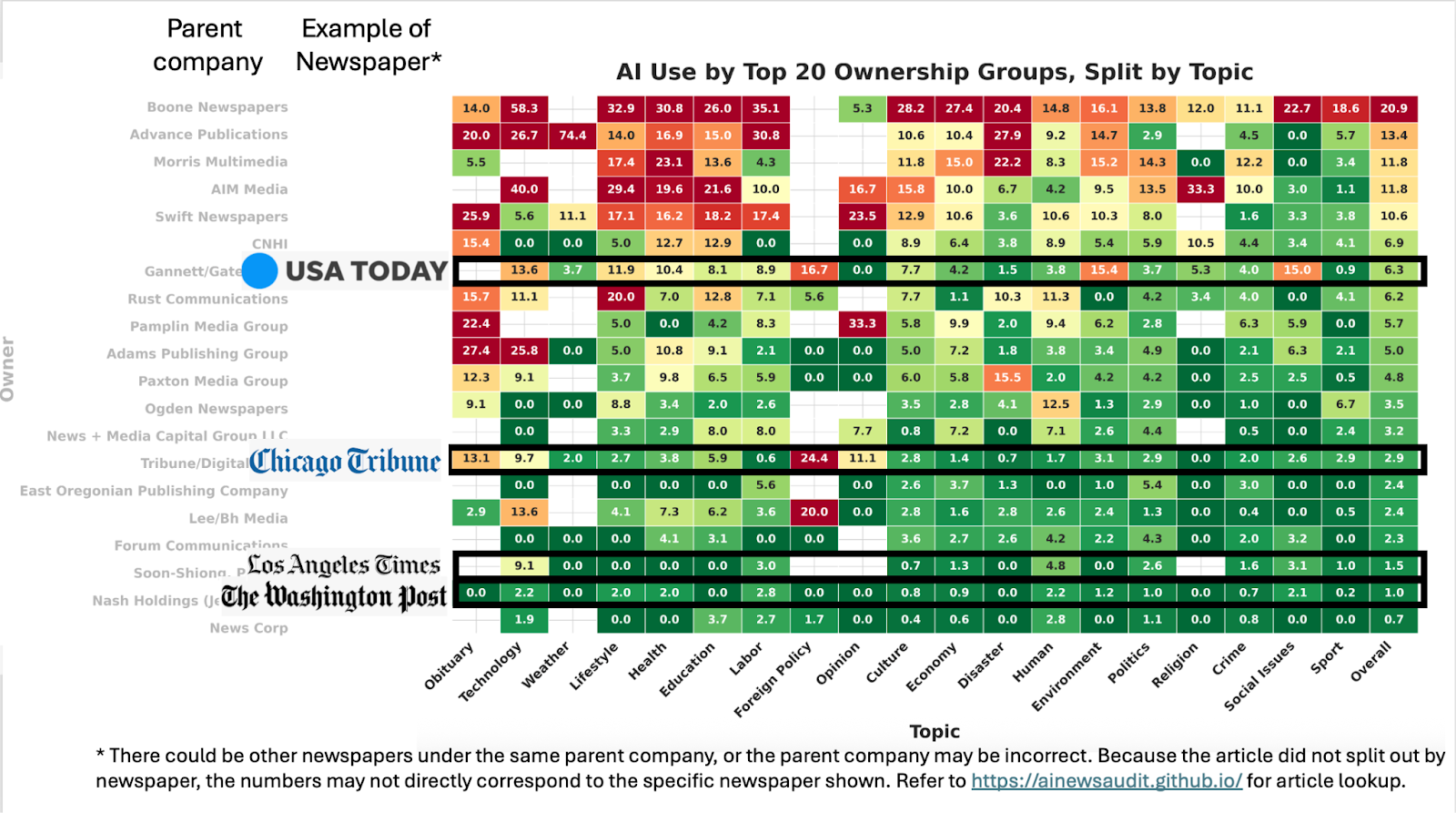

Analyzing 185,000 articles published this year by 1,500 American newspapers, the researchers found that 5% were fully AI-written and another 4% were “mixed,” for a total of 9%. The rate was higher for articles about technology (16%; no surprise!) and health (12%; no good!).

At the top newspapers (The New York Times, The Washington Post and The Wall Street Journal), the rate is lower: 0.7%, which is still substantial, in my view. Here is the full picture by topic.

Spero and colleagues created a portal where you can look up results by article, newspaper, or reporter.

There I found a couple of examples of fully AI-written articles. Here is one from the NYT, published in July 2025:

And here is one from WaPo:

Not surprisingly, opinion pieces are more than six times as likely to be AI-written as work by professional journalists. Here are some notable opinion pieces the paper identified as fully AI-written:

Beyond the proliferation of AI slop, the paper’s authors point out two other very concerning findings:

First, most newspapers have no AI policy. Of the 100 newspapers the paper examined, only two, the New York Post and The Michigan Daily, prohibit AI. Seven others allow it but require disclosure. The remaining 91 have no policy whatsoever.

Second, disclosure is rare. Of 100 AI-written articles Pangram detected, most were not labeled as such. Even newspapers that require disclosure did not always provide it.

The authors conclude: “Overall, our audit highlights the immediate need for greater transparency and updated editorial standards regarding the use of AI in journalism to maintain public trust.”

And as a side note: the paper came out about a month ago, and there has been zero press coverage so far.