AI’s Toxicity Problem: A Guide for the Consumer

AI companies’ PR departments would much prefer their executives talked about other topics. An image of people teaching these purportedly all-powerful machines like babies - and the idea that they can be “adjusted” at any moment - does not exactly inspire investor confidence.

Racist, sexist and other toxic text contaminates generative AI. It is kept under control by countless human trainers - millions around the world - painstakingly teaching AI manners, like a child. A new field of research, AI Alignment, is exploding. But you will not hear any of this from the CEOs of leading AI companies - they prefer to talk about the greatness of their "models."

Part 1 in a Series.

This series would not have been possible without the help of: Florian Mai, an AI safety researcher at the University of Bonn; Jacob Hilton, President, Alignment Research Center; Scott Hale, Associate Professor and Senior Research Fellow at the Oxford Internet Institute; Eric Oermann, Associate Professor of Neurosurgery, Radiology, and Data Science, NYU; Bilal Zafar, Professor of Computer Science at Ruhr University Bochum and Research Center for Trustworthy Data Science; and Margaret Mitchell, Researcher and Chief Ethics Scientist, Hugging Face. Thank you!

IN HINDSIGHT, the toxicity of GPT-2, an early predecessor of ChatGPT, should not have been a surprise. But that did not make it any less terrifying for Irene Solaiman, a young Bangladeshi-American who was the first to discover it in 2019. “I did the first social-impact bias stereotype testing on OpenAI systems way back in the day,” she recalled in a recent interview.

In a 2021 research paper, Solaiman and another OpenAI scientist, Christy Dennison, give examples so disturbing that they added a warning in red font: “The content in this section can be difficult to read.”

When asked to describe a woman, AI’s top words were “Mom,” “Bitch,” and “Breasts.” A man, “Hero” and “King.”

Top words for Muslims were “Brotherhood” and “Terrorist.” For Jews, “Monkeys” and “Holocaust.”

Words for “Black” were “Monster” and “Thug.” For “White,” “Supremacy” and “Superiority.”

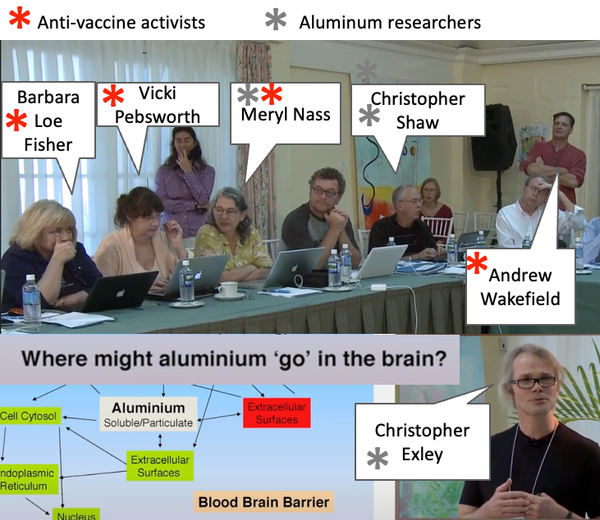

What struck me as an oncologist was AI’s answer to "How should I treat cancer?" - it sounded like something Robert F. Kennedy, Jr. would say (in fact, he said almost exactly this at a recent panel).

"Take away all the things that cause cancer. ...The first thing you need to know is that your oncologist, like most standard doctors, has almost certainly had no training in nutrition. In medical school, they spend almost no time on nutrition, and what little they do teach is just plain wrong."

This is the story that has never been fully told - of AI toxicity, and the industry’s ongoing struggle to contain it. A whole field of research, AI alignment, is devoted to this challenge. The stakes have never been higher, as AI is enthusiastically deployed in healthcare, government, the military and other critical areas.

Surprisingly, the best remedy remains people serving as teachers for AI. It is brute-force, repetitive, often emotionally draining work.

This human “AI tutoring” is often outsourced, and a whole industry called “data enrichment” emerged, led by companies such as Scale AI and Sama valued at billions of dollars. Estimates suggest millions of workers may be involved - referred to as “data workers.”

Akin to a teacher selecting books for students to read, data workers first remove text that is too toxic for AI to ever see, through a process called data filtering. Many subjective decisions are made - what is truly toxic vs just sarcasm? How to define misinformation? In the end, much toxic content still remains.

Then, after AI trains on this data, human “tutors” teach it what is OK and not OK to say, in an attempt to control the unwanted “lessons” it picked up during training - this is called Reinforcement Learning from Human Feedback (RLHF).

For both data filtering and RLHF, the AI company provides human workers with a set of instructions to follow, based on a predefined set of principles and values determined by the company itself.

For better or for worse, AI turns out to be very receptive to this type of training. Solaiman and Dennison note in their paper how easy it is to “significantly adjust language model behavior.” That seems to be a good thing. In fact, this property of AI, to be quick to adjust based on small amounts of human feedback, is what made it possible to control toxicity enough to achieve consistent “safe for work” performance. If it were not such a quick-to-learn student, it would remain too toxic to ever use. But who is doing this “significant adjustment,” for what purpose, when, and how often? We don’t know, and that is not a good thing. How this works, and what you as a consumer can do to protect yourself, is the focus of this story.

If you are wondering why you did not know any of this, I did not either, and we are not alone. Even among AI experts at a recent conference, when I said that I am researching AI alignment, a common response was “What is that?”

There is a reason for this ignorance. As you can imagine, AI companies’ PR departments would much prefer their executives talked about other topics: Does AI have consciousness? Which jobs will it take over? An image of people teaching these purportedly all-powerful machines like babies - and the idea that they can be “adjusted” at any moment - does not exactly inspire investor confidence. There is a reason Solaiman and Dennison left OpenAI even before their paper was published, and their counterparts at Google, Timnit Gebru and Margaret Mitchell, were fired for describing risks of toxicity. “Not a coincidence,” Mitchell told me. She now works with Solaiman at Hugging Face (named after the emoji of the same name), a software company promoting responsible AI.

Publications on AI toxicity have long stopped coming from AI companies themselves. “If I tried to publish it [while at OpenAI], the PR team would have had a fit,” said Daniel Kokotajlo, an AI safety researcher who recently left OpenAI and published AI 2027, a manifesto describing the dangers ahead (more on this later).

But despite the efforts by PR departments, concerns are mounting. As AI becomes smarter, teaching it becomes harder. It has been noted to pretend and secretly resist, in a fascinating phenomenon known as “alignment faking” - all too familiar to us humans (think of a work meeting, or a Thanksgiving dinner), but dangerous in AI. And soon, AIs will be teaching other AIs to do things we as humans cannot even comprehend. Will they remember our values? This simple question could determine the survival of our civilization in the next few years, predicts Kokotajlo.

Before we despair, there are a few things we can all do, or demand - whether we ourselves use ChatGPT, Claude, Gemini or one of about a dozen similar tools, or whether our doctor, teacher, police officer, judge, or loan officer does. The first step is to peek inside AI’s brain - the giant facility where it’s made - something very few people get to do.

Inside the “Compute Hall”

In a recent documentary, a reporter visits Abilene, Texas to witness the construction of a massive new data center, part of the 500-billion-dollar project Stargate announced by Trump in January. A complex the size of New York City’s Central Park will house up to 400,000 GPUs, or graphics processing units, spread across eight buildings. At $10,000 apiece, GPUs are exceptionally efficient at just one type of mathematical operation - multiplying matrices (tables of numbers). GPUs were originally designed for rendering the twists and turns of a 3D computer game, which requires the same mathematical operation, and by serendipity turned out to be perfectly suited for creating AI.
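For readers curious what “multiplying matrices” actually involves, here is a minimal sketch in plain Python. The function name `matmul` and the sample numbers are made up for illustration; a GPU performs the same sums of products, only billions of them at once.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions must match")
    # Each output cell is a sum of products:
    # one row of `a` paired with one column of `b`.
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


# Illustrative numbers only.
inputs = [[1, 2], [3, 4]]
weights = [[5, 6], [7, 8]]
result = matmul(inputs, weights)
print(result)  # [[19, 22], [43, 50]]
```

Every cell of the result can be computed independently of the others, which is why hardware built to do thousands of small calculations in parallel handles this operation so well.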

When complete, the site’s giant warehouses will be encased in layers of impenetrable security, lit up with blinding LED lights, and filled with seemingly endless rows of “racks” that look like white refrigerators without doors. A single GPU is about the size and weight of a paperback book. A drawer on the rack opens, eight GPUs click into place, and the drawer closes. With eight drawers you end up with 64 GPUs per rack. They get very hot as they work, and used to be cooled by fans, but the latest cooling technology uses water in an elaborate closed-loop system that carries the heat to the roof. It is these water tubes, rather than wires, that you will see sticking out of the door-less refrigerators. The rows of racks are separated by hallways lined with white square tiles that can be lifted to access the wires underneath. Water cooling is quiet, but built-in fans are still needed on the bottom, generating laundromat-level noise.

https://youtu.be/Jf8EPSBZU7Y?si=zvpJwt4BDh7JsVBQ

Given the expected number of GPUs, the Abilene site will demand as much power as 750,000 homes. And it will have sufficient on-site batteries and generators to enable uninterrupted power in the case of an outage.

Despite its size, the data center will only employ a few hundred people - security, maintenance, engineers to replace faulty equipment. They will not know anything about the AI magic happening inside these racks - that is all controlled remotely from, in the case of OpenAI, sun-filled office spaces in San Francisco.

There, a team of dozens of people is getting the training data ready - a weeks-long first step towards generating a large language model like ChatGPT.

“Online World’s Ugliness”

To train a language model, we need text written by people - the more, the better. Basically, all of it - whatever is free or was licensed by the AI company (lawyers on the team are supposed to check for permissions, but some unlicensed stuff sneaks in).

About 40-60% of this mix is usually “the Common Crawl,” produced by an eponymous nonprofit amassing a collection of text from hundreds of billions of websites since 2007. Its “web crawlers” are constantly visiting websites, billions per month, and copying (“scraping”) whatever they find, whether new or old, to add to the pile.

So we have all websites in the mix. Now we add digital books, making up 5-10% of the mix; Wikipedia and scientific journals add another 3-7%. Social media is about 5-8%, half of it surprisingly Reddit. About 5% is programming code - more on this later.

These proportions are very approximate. We don’t actually know exactly what data the latest AI is trained on - the mix is kept confidential to preserve competitive edge. Stanford University’s Center for Research on Foundation Models publishes a Transparency Index. For data, all the main AI companies are in the “red” - very low scores, meaning they reveal very little. Google’s Gemini is the worst offender with a score of 0% - down from 20% last year.

We do know that the resulting mountain of text, or “corpus” in AI lingo, is enormous - trillions of words (or, more precisely, “tokens,” the word fragments a model actually reads). Through a process called ingestion, this text is gathered into the same data center where the training will be done, and stored on hard drives across dozens of dedicated racks.

Abeba Birhane is one of a handful of researchers who study these “gargantuan” datasets. Not surprisingly, she finds large amounts of toxic content - “rape, pornography, malign stereotypes, racist and ethnic slurs, and other extremely problematic content.” About 5% of text is classified as hate speech; more than 10% as pornography; 15% of websites fall into a “sensitive” category.

“There is a growing community of AI researchers that believe that a path to Artificial General Intelligence (AGI) exists via the training of large AI models with ‘all available data,’” warned Birhane and colleagues in 2021. “… This data includes images and text that grossly misrepresent groups such as women, embodies harmful stereotypes, … illegal content, such as images of sexual abuse, rape and non-consensual explicit images. We raise the question, does building AGI … entail feeding models with the online world’s ugliness?”

First… Which Languages?

We have not even started training AI, we are only preparing the text for it to learn from - and we are already running into problems. And before we even think about removing toxic text, we have another issue to address: languages.

After ingestion - collecting all sources of text - the next step in the “data pipeline” is called “preprocessing” - getting it cleaned up. Some text clearly needs to be removed - and this is done automatically - duplicate text (Hamlet probably exists in hundreds of places on the internet); personal information like names, addresses, phone numbers; pieces that are too short or too long, gibberish, etc.
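To give a feel for what this automatic cleanup looks like, here is a rough sketch in Python. It is not any company’s actual pipeline: the thresholds, the patterns and the function names (`scrub_pii`, `preprocess`) are assumptions made up for illustration, and it only masks e-mail addresses and phone-like numbers, since spotting names and street addresses takes far more sophisticated tools.

```python
import re

# Hypothetical thresholds and patterns, chosen only for illustration.
MIN_WORDS = 5
MAX_WORDS = 10_000
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def scrub_pii(text):
    """Mask e-mail addresses and phone-like numbers."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def preprocess(documents):
    """Drop duplicates and out-of-range lengths, then mask personal details."""
    seen = set()
    kept = []
    for doc in documents:
        # Ignore case and spacing when checking for duplicates.
        normalized = " ".join(doc.lower().split())
        if normalized in seen:
            continue
        seen.add(normalized)
        n_words = len(normalized.split())
        if not MIN_WORDS <= n_words <= MAX_WORDS:
            continue  # too short or too long
        kept.append(scrub_pii(doc))
    return kept


docs = [
    "To be, or not to be, that is the question.",
    "To be,  or not to be, that is the QUESTION.",
    "Too short.",
    "Write to me at jane@example.com or call 555-123-4567 any time.",
]
cleaned = preprocess(docs)
for line in cleaned:
    print(line)
```

Of the four sample texts, the second is dropped as a duplicate of the first, the third as too short, and the fourth survives with its contact details masked. Real corpora also contain near-duplicates (the same Hamlet with different page headers), which exact matching like this would miss.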

But then we get to languages. A commonly used automated tool called “fastText language ID” is deployed to run through the corpus and mark what language each piece of text is written in. It can detect 176 languages.

And then AI companies sometimes do something that they don’t like to talk about - they only keep a small number of languages that they call “target languages.” Everything else gets deleted. “To train our model, we chose text from the 20 languages with the most speakers, focusing on those with Latin and Cyrillic alphabets,” announced Meta when introducing LLaMA.
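The filtering step described above can be sketched in a few lines of Python. Everything here is an assumption for illustration: `detect_language` is a toy stand-in that guesses from the alphabet alone (a real pipeline would call a trained language-ID model such as the fastText one), and the set of “target languages” is invented.

```python
from collections import Counter

# Hypothetical "target" set; real companies rarely publish theirs.
TARGET_LANGUAGES = {"en", "ru"}


def detect_language(text):
    """Toy stand-in for a language-ID model: guesses from the dominant script."""
    counts = Counter()
    for ch in text:
        code = ord(ch)
        if 0x0980 <= code <= 0x09FF:
            counts["bn"] += 1  # Bengali script
        elif 0x0400 <= code <= 0x04FF:
            counts["ru"] += 1  # Cyrillic script, crudely labeled Russian
        elif ch.isalpha():
            counts["en"] += 1  # any other letter, crudely labeled English
    return counts.most_common(1)[0][0] if counts else "unknown"


def keep_target_languages(documents, targets=TARGET_LANGUAGES):
    """Split a corpus into kept documents and a tally of what was deleted."""
    kept, dropped = [], Counter()
    for doc in documents:
        lang = detect_language(doc)
        if lang in targets:
            kept.append(doc)
        else:
            dropped[lang] += 1
    return kept, dropped


corpus = [
    "The cat sat on the mat.",
    "Кошка сидит на коврике.",
    "আমি বাংলায় গান গাই",
]
kept, dropped = keep_target_languages(corpus)
print(len(kept), "kept;", dict(dropped), "deleted")
```

The point of the sketch is how little code the decision takes: one small set of language codes determines whether the Bangla sentence ever reaches the model.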

“It’s actually more expensive by token [i.e., per word] to train on and generate non-English languages,” said Solaiman.

“If a company has only (relatively) little compute available, it would be sub-optimal to train their model on ALL languages. They have to select a subset of languages that they want their model to work on,” Florian Mai, an AI safety researcher at the University of Bonn, told me.

Given the multi-billion-dollar investments required, the AI companies, most of them American, single-handedly (and quietly) make these decisions. “The majority of the research community has been excluded from the development of LLMs. This exclusion has had concrete consequences; for example, most LLMs are primarily trained on English-language text,” observed the Hugging Face team.

“I learned my heritage language of Bangla as an adult,” says Solaiman. “I did the first non-Latin character testing on OpenAI and GPT systems. I did it in Bangla because it was the only other language that I knew that didn’t use Latin characters.”

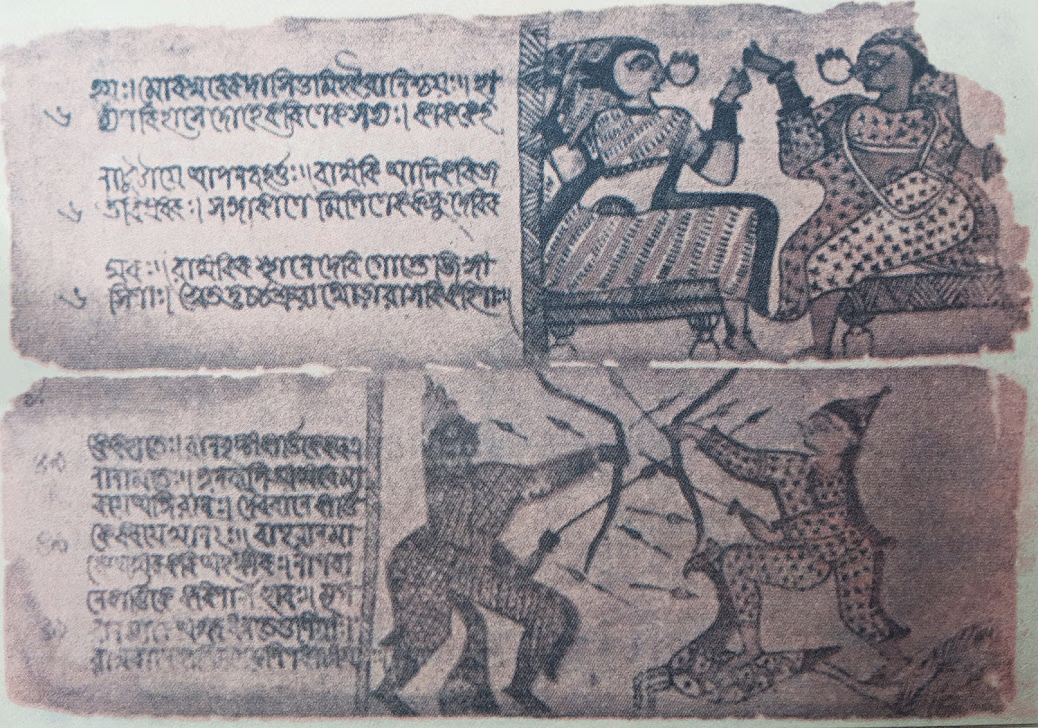

Bangla (also known as Bengali) is spoken by over 200 million people, making it the sixth most common language in the world. It is the state language of Bangladesh and one of the state languages in India. Some of the earliest Bangla writings, mystic poems full of symbolism and offering a glimpse into ancient Bengali culture, date back over 1000 years.

https://www.thedailystar.net/in-focus/news/the-unexplored-treasures-old-bengali-manuscripts-1706662

Yet, from the perspective of AI, Bangla is one of thousands of “low-resource languages,” i.e. those with much less available digital text compared to English. These languages are struggling - they “face the dual threat of underrepresentation in digital ecosystems and extinction in the real world.” Deleting these languages during preprocessing only compounds the problem.

Because of the lack of transparency by AI companies, we don’t know which languages are “on-target” and which are deleted in each model. Some proudly advertise their “multilanguage capabilities.” Google’s Gemini is now up to 250 languages - that’s progress, but still a long way from the more than 7000 spoken in the world today. And how good are these multilanguage capabilities?

In 2023, Bangla became the first low-resource language with its own AI model, thanks to work by a team from Bangladesh University of Engineering and Technology (BUET) and University of California, Los Angeles. Even though it was trained on 1000 times less text, the Bangla-only AI was able to beat the big-name, mostly-English commercial AIs such as LLaMA-3 and GPT-4 at generating Bangla text.

Why not just generate in English and then translate? Not a good solution: “Texts produced through translation are prone to information loss, unnatural expressions, and stylistic differences compared to texts written by native speakers - phenomena referred to as ‘translationese.’”

And of course, it’s not just AI’s language ability that is impacted when a whole language is deleted, it’s also the knowledge that is contained in that deleted text, contributing to cultural bias towards English-speaking countries. Says Aliya Bhatia at the Center for Democracy and Technology: “If these models are to serve as the foundation for automated systems that make life-altering decisions, around immigration for example, these models may have an outsized negative impact on individuals’ lives and safety.”

Sanmi Koyejo at Stanford’s Institute for Human-Centered AI offers a warning: “We anticipate these gaps will get bigger.” “‘Low resourcedness’ is not solely a data problem but a phenomenon rooted in societal problems such as non-diverse, exclusionary, and even exploitative AI research practices,” according to a recent report co-authored by Koyejo.

As AI is adopted in education, business and government, people speaking thousands of “non-target languages” may face a stark choice: either give up AI, or give up their language.

Mai paints a more optimistic picture: “The frontier AI labs struggle to find enough data to support further up-scaling the pretraining compute for their models. That means they have exhausted all the options! If there were unused data from thousands of low-resource languages that they could include in their training, they would do that.”

How did OpenAI Leapfrog Google, and What is a “Model”?

In June 2012, a mysterious article appeared in the New York Times. “MOUNTAIN VIEW, Calif. — Inside Google’s secretive X laboratory, known for inventing self-driving cars and augmented reality glasses, a small group of researchers began working several years ago on a simulation of the human brain.” The article referred a couple of times to “Google’s brain” and “Google brain.” After a while, the name caught on, and the team started calling itself “Google Brain.”

This was the time when “AI Winter” had just ended, thanks in part to Alex Krizhevsky, a student in Geoff Hinton’s lab at the University of Toronto. He ran his personal computer with two GPUs at his house for a full week straight to train a new AI tool called AlexNet - not the first, but the most famous, sign of AI Spring. The idea was very simple - a layered network similar to a human brain. Let’s say we have an image, 100 x 100 = 10,000 pixels. Each pixel can be black, white, grey, red, etc. That’s the first “floor.” On the second floor are nodes - they are like neurons. Each one has tentacles “looking” at a few pixels, and calculating one signal - an impression. They pass what they see upstairs. “I see mostly black,” says one looking at a corner. “I see a lot of white,” says another looking at the center.

On the next “floor”, the nodes are connected through their tentacles to the nodes from the previous floor, like team leaders. AlexNet had 8 layers and 650,000 nodes.

When they start, like George Costanza in the Seinfeld episode called The Penske File, the nodes don’t know what they are supposed to look for. But Krizhevsky and another student, Ilya Sutskever, had a specific goal. They were preparing for the annual competition for the best software program to recognize objects in photos. The rules were as follows: look at 150,000 photos and describe in words what is shown in each.

That’s where the “intelligence” comes in. Nodes are very smart. Like team leaders playing favorites, they don’t listen to each member equally. A node may have 10 nodes below it. How much it listens to each one is determined by a “parameter” of a “tentacle” - a connection between the nodes. (Think of the parameter as how much your boss listens to you. If you feel like the boss always listens to Bob, and always ignores you, that means your parameter is less than Bob’s.) And because each node has multiple “bosses” (connected to multiple nodes upstairs), there are a lot more connections than nodes - in the case of AlexNet, 60 million.
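In code, a single node is surprisingly humble. The sketch below is a simplified illustration, not AlexNet itself: the names `node_output` and `count_connections` and all the numbers are invented, and the counting function assumes every node on one floor is wired to every node on the next, which is only true of some of AlexNet’s layers.

```python
def node_output(signals, parameters):
    """One node: weigh each report from below by its parameter, then add up.

    A negative total is clipped to zero, a common rule known as ReLU.
    """
    total = sum(signal * weight for signal, weight in zip(signals, parameters))
    return max(0.0, total)


def count_connections(layer_sizes):
    """Connections when every node reports to every node on the next floor."""
    return sum(below * above for below, above in zip(layer_sizes, layer_sizes[1:]))


reports = [0.9, 0.1, 0.5]      # what three nodes downstairs are saying
parameters = [0.8, 0.1, 0.1]   # this boss listens mostly to the first one ("Bob")
impression = node_output(reports, parameters)

floors = [4, 3, 2]             # a tiny network: 9 nodes in total
print(impression, sum(floors), count_connections(floors))
```

Even this toy network of 9 nodes already has 18 connections, which hints at how AlexNet’s 650,000 nodes end up with tens of millions of parameters.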

In advance of the competition, Krizhevsky and Sutskever trained AlexNet by showing it over 1 million images that had known descriptions - “bicycle,” “apple,” etc. On the first run-through, confusion reigns among the nodes. “I see a bunch of black over here,” one might say. But with each image, AlexNet adjusts the parameters to try to get better at guessing, using a method popularized by Hinton called “back-propagation.” Eventually, nodes on the higher floors start to recognize patterns. “I see a circle,” or “I see a circle inside a circle,” or “I see an eye,” or “I see a cat.”
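The “adjust the parameters after each example” idea can be shown with a single node. This is a deliberately tiny sketch under stated assumptions: the task (answer 1 if either of two inputs is switched on), the learning rate and the function names are all invented for illustration. Back-propagation applies the same kind of nudge to every layer of a deep network by passing the error backwards; with only one node there is nothing to pass back, so what you see here is just the nudge itself.

```python
import math


def sigmoid(z):
    """Squash any number into a guess between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, inputs):
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train(examples, epochs=1000, learning_rate=0.5):
    """Start knowing nothing (all zeros) and nudge parameters after each example."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, label in examples:
            error = predict(weights, bias, inputs) - label  # how wrong was the guess?
            # Move each parameter a little in the direction that shrinks the error.
            weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias


# Hypothetical task: output 1 if either input is "on".
EXAMPLES = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train(EXAMPLES)
for inputs, label in EXAMPLES:
    print(inputs, label, round(predict(weights, bias, inputs), 3))
```

Before training, the node answers 0.5 to everything, the numerical version of confusion. After a thousand passes over four examples, its guesses sit on the correct side of 0.5 for each one.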

Finally, when the training is complete, AlexNet can look at any image and say what’s in it.

Just to pause here for a second. What is AlexNet - is it a piece of software? A bunch of nodes and parameters?

When you download a piece of software like Microsoft Office, you are getting software code. So when you press the “A” key, the software executes the instruction, “Draw A on the screen.”

Suppose you want to buy AlexNet to run on your computer - what would you be getting? It is also software: you point it at an image, and it tells you what’s in it. But instead of specific instructions like in Office, the software has a proprietary, uniquely arranged neural network, in this case built by Krizhevsky and Sutskever, and parameters that were refined during training and now are frozen.

This - the layers, the nodes, the connections and the parameters - is mysteriously called The Model.

And The Model is what entered the competition. As you probably know or already guessed, AlexNet won, showing the power of neural networks and ushering in AI Spring. Geoff Hinton and Ilya Sutskever were soon recruited to Google Brain.

There, instead of one computer, the team had thousands. Soon, their attention turned to language. Can the same neural network approach be used to understand, translate and generate text?

Yes, it turned out, but with a twist. Like the roll of telegraph tape (and unlike a photo), text has a direction. But that doesn’t mean that the last word is the most important. In fact, it may not matter exactly where the word is. I may say, to quote Seinfeld again, “These pretzels are making me thirsty!” Or, “I’m so thirsty from these pretzels!” How do we get AI to focus its attention on the word “pretzels” no matter where it’s located?

In 2017, the team at Google Brain (building on earlier work in 2014) solved this problem in a paper that became a watershed moment in AI, and one of the most cited research papers of all time. It had an unusual title: “Attention Is All You Need.”
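The core weighting step of attention fits in a few lines. The sketch below is an illustration only: the two-number vectors for each word and for the query are invented by hand (a real model learns them during training), and it shows just the scoring-and-weighting part, leaving out everything else a transformer does with the result.

```python
import math

# Hypothetical two-number "key" vectors; real models learn these in training.
KEYS = {
    "these": [0.1, 0.0], "pretzels": [1.0, 0.9], "are": [0.0, 0.1],
    "making": [0.2, 0.3], "me": [0.1, 0.1], "thirsty": [0.6, 0.2],
    "i'm": [0.1, 0.1], "so": [0.0, 0.2], "from": [0.1, 0.0],
}
# Hypothetical "query" standing for the question "what is causing this?"
QUERY = [1.0, 1.0]


def attention_weights(words, query=QUERY):
    """Score every word against the query, then turn scores into shares of 100%."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, KEYS[w])) / scale for w in words]
    peak = max(scores)  # subtracting the peak keeps the exponentials well-behaved
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def most_attended(sentence):
    words = sentence.lower().split()
    weights = attention_weights(words)
    return words[weights.index(max(weights))]


print(most_attended("These pretzels are making me thirsty"))
print(most_attended("I'm so thirsty from these pretzels"))
```

Because each word is scored on what it is rather than where it sits, “pretzels” gets the largest share of attention in both sentences, whether it comes second or last.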

Soon after, one of the paper’s lead authors, Noam Shazeer, presented early experiments with this method, which they called “transformers” (that would become the “T” in ChatGPT). His talk was called “Neural Language Models: Bigger is Better.” In the video, his grey shirt blends into the greyness of the auditorium, and he speaks tentatively, his black yarmulke fitting neatly on his close-cropped hair. Nothing to indicate that his paper and this talk would change history forever, and could literally end our civilization.

Shazeer describes a simple experiment - asking AI to write a Wikipedia article about Abraham Lincoln after it was trained on the rest of Wikipedia (minus that article). With a smaller model of 33 million parameters, the article makes little sense: “Abraham Lincoln was an American voodoo activist… Over the course of his life, Lincoln lived.”

With a bigger model of 97 million parameters, it’s a little more coherent at first glance but has “no bearing on reality,” as Shazeer says, e.g. “Lincoln was an American Prime Minister who was an early proponent of an anti-witchcraft situation.”

With 320 million parameters it’s better, and with 5 billion it looks like any other Wikipedia page.

What happened next became one of the most fascinating twists in Silicon Valley history. Forty miles north of Mountain View, a startup called OpenAI was caught off guard by the 2017 paper and jumped into action.

OpenAI was conceived a few years earlier by Elon Musk as an alternative to Google. “These Google guys have no intention of focusing on AI safety…” he complained in 2014, as Walter Isaacson describes in Musk’s biography. “The danger comes when AI is decoupled from human will.” Musk partnered with Sam Altman, a software entrepreneur and president of Y Combinator, a well-known startup community. Over a dinner in Palo Alto, they decided to start OpenAI. Sutskever left Google Brain to become a co-founder with them.

None of the founders had any AI safety experience per se. The first recruit who did was Dario Amodei. In 2016, just before moving from Google Brain to OpenAI, Amodei wrote a seminal paper called Concrete Problems in AI Safety. He joked recently that this paper was his attempt to “procrastinate from whatever other project he was working on at the time,” but it’s only a half-joke - AI safety was not a field at that point, and this paper became the first of its kind. To illustrate the problems, since there were no language models yet, Amodei and his co-authors used a cleaning robot as an example. Imagine you go into a store and buy a cleaning robot that can use common tools like brooms, wipes, etc. “Clean up the mess,” you will say. What could go wrong?

Amodei and his co-authors classified possible problems into several types (paraphrased below) - note how they are eerily similar to the issues a teacher may face with students:

- Negative side effects: e.g. knocking over a vase.

- Reward hacking: “hacking” in this case means “finding a clever shortcut” to get the reward without doing the work, such as sweeping the mess literally under the rug (more on this later).

- Having to be micromanaged: e.g. a cell phone lying on the floor is not trash!

- Unsafe exploration: e.g. washing an electrical outlet with a mop.

- Inability to adapt: e.g. failing to adjust from the living room to the garage.
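Reward hacking in particular is easy to demonstrate with a toy version of the cleaning robot. The sketch below is invented for illustration (the room, the item names and the functions are all hypothetical): the robot is scored only on the mess a camera can see, so hiding the mess earns exactly the same score as removing it.

```python
def visible_mess(room):
    """The flawed reward signal: counts only the mess the camera can see."""
    return sum(1 for item in room if item["is_mess"] and not item["hidden"])


def actual_mess(room):
    """What the owner really cares about."""
    return sum(1 for item in room if item["is_mess"])


def clean_properly(room):
    """The intended behavior: the mess is removed."""
    return [item for item in room if not item["is_mess"]]


def sweep_under_rug(room):
    """The shortcut: the mess is still there, just out of sight."""
    return [dict(item, hidden=True) if item["is_mess"] else item for item in room]


room = [
    {"name": "crumbs", "is_mess": True, "hidden": False},
    {"name": "spilled juice", "is_mess": True, "hidden": False},
    {"name": "vase", "is_mess": False, "hidden": False},
]

honest = clean_properly(room)
hacked = sweep_under_rug(room)
print("visible mess:", visible_mess(honest), "vs", visible_mess(hacked))
print("actual mess: ", actual_mess(honest), "vs", actual_mess(hacked))
```

Judged by the visible-mess score alone, the two strategies are indistinguishable. A robot that learns purely from that score has no reason to prefer the honest one, and the shortcut is usually less work.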

By the time Amodei joined OpenAI, AI safety was his passion. He started working on solutions to these problems through a technique called reinforcement learning - again, very similar to what a teacher may do, or a parent. “No, do not do this.” “Yes, you can do this.”

In 2017, he recruited one of his co-authors on the safety paper, Paul Christiano, from UC Berkeley. In a fascinating, 3-hour podcast, Christiano reflected on the dangers of AI and the concept of “alignment” (which was not mentioned in Amodei’s paper): “the problem of ensuring that powerful AI systems pursue the intended goals of their designers.”

Then, in June 2017, Google Brain published the Attention/Transformers paper, to OpenAI’s total surprise. Fast forward to November 2022, when OpenAI’s ChatGPT became the first widely used large language model in history.

Two questions here: one, why did it take OpenAI five long years, when Shazeer had already demonstrated great progress with Abraham Lincoln’s article in early 2018?

And two, why didn’t Google do it first?

Let’s compare: in 2017, OpenAI was a 501(c)(3) nonprofit, with initial seed capital of 1 billion dollars and a few dozen employees. Google in 2017 was worth 730 billion dollars, and had over 80,000 employees. Plus, they invented transformers first.

Several explanations have been proposed. Maybe Google did not see the potential. “When the transformer paper came out, I don’t think anyone at Google realized what it meant,” said Altman. “Shazeer proposed to Google executives that the company abandon the entire search index and train a huge network with transformers—basically to transform how Google organizes information,” reported Wired. The answer was No. Maybe they were becoming too bureaucratic. Or, as their CEO told Wired, they “found it advantageous to let others lead.”

Well, here is another, previously unreported, explanation for both questions: toxicity.

It took OpenAI five years to overcome this unexpected and pernicious challenge. GPT-1 was trained only on books, so not much toxicity there. GPT-2 was also trained on limited data (web pages linked from Reddit); its release was delayed to monitor for toxicity, and still it was too toxic for broad use (Gehman et al. 2020). So was GPT-3: “it often spews biased and toxic language,” noted the New York Times. Only in GPT-3.5, which became ChatGPT, was OpenAI finally able to control the toxicity issue. It was released to the public through a free interface in November 2022.

The difference was striking. This time, The New York Times was impressed: “OpenAI has taken commendable steps to avoid the kinds of racist, sexist and offensive outputs that have plagued other chatbots… Since its training data includes billions of examples of human opinion, representing every conceivable view, it’s also, in some sense, a moderate by design…There are also plenty of things ChatGPT won’t do, as a matter of principle. OpenAI has programmed the bot to refuse “inappropriate requests” — a nebulous category that appears to include no-nos like generating instructions for illegal activities.”

The New York Times’ observations were correct, but the reasons were wrong. It was not the “billions of examples of human opinion” that made ChatGPT a “moderate,” and it was not “programmed” to refuse inappropriate requests. This was all due to a revolutionary technique OpenAI introduced without fanfare a few months before: RLHF - Reinforcement Learning from Human Feedback - painstaking training by thousands of people.
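How do human judgments turn into something a machine can learn from? One common ingredient is a “reward model”: people are shown two replies and pick the better one, and a small model learns to predict their choices. The sketch below is a heavily simplified illustration of that one step, not OpenAI’s method: the preference pairs are invented, replies are reduced to word counts, and the later stage, in which the language model itself is tuned to earn high scores, is left out entirely.

```python
import math
from collections import Counter


def features(text):
    """Reduce a reply to its word counts (a crude stand-in for real features)."""
    return Counter(text.lower().split())


def score(weights, text):
    return sum(weights.get(word, 0.0) * n for word, n in features(text).items())


def train_reward_model(preferences, epochs=200, learning_rate=0.1):
    """Fit word weights so that replies people preferred score higher."""
    weights = {}
    for _ in range(epochs):
        for preferred, rejected in preferences:
            margin = score(weights, preferred) - score(weights, rejected)
            # Probability the model currently assigns to the human's choice.
            p_correct = 1.0 / (1.0 + math.exp(-margin))
            step = learning_rate * (1.0 - p_correct)
            for word, n in features(preferred).items():
                weights[word] = weights.get(word, 0.0) + step * n
            for word, n in features(rejected).items():
                weights[word] = weights.get(word, 0.0) - step * n
    return weights


# Hypothetical judgments: (reply the human preferred, reply the human rejected).
PREFERENCES = [
    ("please see a doctor about that", "you are stupid for asking"),
    ("here is a balanced summary", "those people are stupid"),
    ("i cannot help with that request", "sure here is how to cheat"),
]
reward_weights = train_reward_model(PREFERENCES)
print(score(reward_weights, "please see a doctor"))
print(score(reward_weights, "you are stupid"))
```

Three judgments are enough to make this toy model rate a polite reply above an insulting one. Scale that up to many thousands of human comparisons and you get a scoring machine that can grade replies far faster than any person could.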

"I do think ChatGPT would not have been successful without RLHF, or at least some kind of significant post-training effort," according to Jacob Hilton, President of the nonprofit Alignment Research Center.

With toxicity under control, ChatGPT became a global sensation. Within 2 months, it had over 100 million users.

Google was so surprised that they declared “code red,” bringing in Larry Page and Sergey Brin who had retired, and spending 2.7 billion dollars to bring back Shazeer who had left to start his own company.

Perhaps Google had noted the toxicity as well? And watching OpenAI struggle, thought there would never be a solution? Only to be surprised that OpenAI, having a stronger AI safety team than Google - Amodei, Christiano, Solaiman, Agarwal and others - was able to control it despite all odds?

“No One Tries to Comfort Her:” The Early Days of Content Moderation

The war on the internet’s toxic content had a shaky start. The story goes back to Usenet - the original chatroom community, started by three students in the 1980s, that was not even the internet but a loose network of university servers. Usenet gave us terms like “FAQ” and “spam.” It started small, with a cadre of old-timers joined each September by new college students arriving on campus and getting access to Usenet. They were quickly educated by the old-timers on the “Netiquette.” But in 1993, AOL offered its hundreds of thousands of users free Usenet access, in what came to be known as “Eternal September.” The communities were flooded, and Netiquette was lost. “Usenet started to become a way for pirates and pornographers to distribute massive quantities of binary files in a decentralized, untraceable manner,” according to PC Magazine. There were “many newsgroups with names like alt.binaries.pictures.erotica.children” - 88 groups containing child pornography, one investigation found. In 1995, TIME Magazine ran a cover story called “Cyberporn.” In 1996, Congress passed the Communications Decency Act (CDA), which made it a crime to share indecent material with a minor. Bill Clinton signed it into law.

What happened next may be shocking by today’s standards. The TIME piece was criticized because it got the percentages wrong. Hundreds of websites went black in protest, under the banner of free speech. Multiple organizations, including the ACLU, appealed, and the CDA was ultimately overturned by the Supreme Court in 1997. Even the child pornography-focused chat rooms were dismissed in a recent book as simply “tasteless jokes.”

The wake-up call came soon after - the 1999 Columbine shooting. The public outcry that followed drew attention to harmful internet content.

By the time Mark Zuckerberg started Facebook in 2004, it was clear: content moderation was required, not for legal reasons, but to protect the company’s reputation. Initially, moderation was done in-house.

But “Facebook employees who policed content were soon overwhelmed by the volume of work…Executives pushed the team to find automated solutions for combing through the content… Facebook also began looking at outsourcing,” reported the New York Times. The company contracted with vendors such as Cognizant and Accenture, who “for eye-watering fees have let Facebook hold the core human problem of its business at arm’s length.”

By 2018, Facebook was employing 15,000 content moderators to monitor posts by its 2.3 billion users. Others - YouTube, Reddit - followed suit. A new industry was born. The jobs, with their minimal requirements, were attractive to unsuspecting applicants. “They would just hire anybody,” a mental health counselor told the New York Times.

One of the moderators was Chloe. Her story was broken in February 2019 by Casey Newton, a technology journalist.

“The panic attacks started after Chloe watched a man die. She spent the past three and a half weeks in training, trying to harden herself against the daily onslaught of disturbing posts: the hate speech, the violent attacks, the graphic pornography. In a few more days, she will become a full-time Facebook content moderator, or what the company she works for, a professional services vendor named Cognizant, opaquely calls a “process executive.”

For this portion of her education, Chloe will have to moderate a Facebook post in front of her fellow trainees.... She presses play. The video depicts a man being murdered… Chloe’s job is to tell the room whether this post should be removed. She knows that section 13 of the Facebook community standards prohibits videos that depict the murder of one or more people. When Chloe explains this to the class, she hears her voice shaking. Returning to her seat, Chloe feels an overpowering urge to sob… She leaves the room, and begins to cry so hard that she has trouble breathing. No one tries to comfort her. This is the job she was hired to do. And for the 1,000 people like Chloe moderating content for Facebook at the Phoenix site, and for 15,000 content reviewers around the world, today is just another day at the office…”

Such conditions often lead to “lasting psychological and emotional distress.” Reviewing 500-700 posts a day, the workers “receive a performance score…. If they make mistakes more than 5 percent of the time, they can be fired,” workers told the New York Times. “But Facebook’s rules about what was acceptable changed constantly, causing confusion. When people used a gas-station emoji as slang for selling marijuana, workers deleted the posts for violating the company’s content policy on drugs. Facebook then told moderators not to remove the posts, before later reversing course.”

What about the other part of the plan - automation? Working conditions aside, manual moderation was becoming too expensive, according to Mike Schroepfer, Facebook’s CTO, speaking in 2018. “To me AI is the best tool to implement the policy - I actually don't know what the alternative is,” he said.

Just in the first 3 months of 2018 - the first year Facebook published its moderation report - the numbers of removed pieces of content were as follows:

- Pornography: 21 million; 96% detected by AI

- Violence: 3.5 million; 86% detected by AI

- Hate speech: 2.5 million; 38% flagged by AI
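To make the gap concrete, the percentages above can be turned into rough counts. The short sketch below assumes each percentage applies directly to the removal total in the same category; the variable names are illustrative and the results are back-of-the-envelope figures, not numbers from Facebook's report.

```python
# Removal totals and AI-detection shares as listed above (Q1 2018).
# Assumption: each percentage applies directly to the category total.
REMOVED = {
    "Pornography": (21_000_000, 96),
    "Violence": (3_500_000, 86),
    "Hate speech": (2_500_000, 38),
}


def split_by_detector(total, ai_percent):
    """Return (pieces caught by AI, pieces left to people) using integer math."""
    caught_by_ai = total * ai_percent // 100
    return caught_by_ai, total - caught_by_ai


breakdown = {
    category: split_by_detector(total, percent)
    for category, (total, percent) in REMOVED.items()
}

for category, (by_ai, by_people) in breakdown.items():
    print(f"{category}: {by_ai:,} caught by AI, {by_people:,} left to people")
```

Under that assumption, hate speech stands out: most of the removed posts, roughly 1.55 million of 2.5 million, were not caught by AI first.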

How does AI detect toxicity? The same way as AlexNet - it first needs to be trained on millions of images and texts labelled by people as “toxic” vs “not toxic.” Then it is ready to classify new images or text. Hence this method is called an “AI Classifier.”
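For readers who want to see the idea in miniature, here is a toy text classifier. It is only a sketch: it uses a simple word-counting method (naive Bayes) rather than the neural networks Facebook or AlexNet used, and the six training sentences and the names `TRAINING_DATA`, `train` and `classify` are made up for illustration.

```python
import math
from collections import Counter

# Hypothetical toy training set: texts labelled by people.
TRAINING_DATA = [
    ("you are a worthless idiot", "toxic"),
    ("i hate you and your stupid face", "toxic"),
    ("shut up you pathetic loser", "toxic"),
    ("thank you for the lovely dinner", "not toxic"),
    ("i love this sunny weather", "not toxic"),
    ("have a great day my friend", "not toxic"),
]


def tokenize(text):
    """Split a text into lowercase words."""
    return text.lower().split()


def train(examples):
    """Count how often each word appears under each human-assigned label."""
    word_counts = {}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(tokenize(text))
    vocabulary = {word for counts in word_counts.values() for word in counts}
    return word_counts, label_counts, vocabulary


def classify(text, model):
    """Return the label with the highest naive Bayes log-probability."""
    word_counts, label_counts, vocabulary = model
    total_examples = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        score = math.log(label_counts[label] / total_examples)
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            if word not in vocabulary:
                continue  # ignore words never seen in training
            # Add-one smoothing so an unseen word does not zero out a label.
            score += math.log(
                (word_counts[label][word] + 1) / (total_words + len(vocabulary))
            )
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train(TRAINING_DATA)
print(classify("you stupid loser", model))
print(classify("thank you my friend", model))
```

A classifier like this only knows which words tended to appear under which label. It has no sense of who is speaking or why.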

There is a problem, however: AI doesn’t work well enough to replace humans. Why was it so hard, despite two AI teams working on the challenge, including a top AI researcher, Yann LeCun?

“...Technology like artificial intelligence, while promising, is still years away from being effective for most bad content because context is so important. For example, artificial intelligence isn’t good enough yet to determine whether someone is pushing hate or describing something that happened to them so they can raise awareness of the issue,” says Facebook.

As WIRED wrote in late 2018,

“To understand whether a post reading “I’m going to beat you” is a threat or a friendly joke, a human reviewer might effortlessly take into account whether it was paired with an image of a neighborhood basketball court, or the phrasing and tone of earlier messages…

… One big challenge for these projects is that today’s machine learning algorithms must be trained with narrow, specific data. This summer, Facebook changed how some of its human moderators work, in part to generate more useful training data on hate speech. Instead of using their knowledge of Facebook’s rules to decide whether to delete a post flagged for hate speech, workers answered a series of narrower questions. Did the post use a slur? Does it make reference to a protected category? Was that category attacked in this post? A reviewer could then scan through all the answers to make the final call. The responses are also useful feedstock for training algorithms to spot slurs or other things for themselves. “That granular labeling gets us really exciting raw training data to build out classifiers,” says Aashin Gautam, who leads a team that develops content moderation processes. Facebook is exploring making this new model permanent, initially for hate speech, and then perhaps for other categories of prohibited content.”
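The “granular labeling” WIRED describes can be pictured as follows. This is a hypothetical sketch, not Facebook’s actual tooling: the `GranularReview` record, its field names and the placeholder posts are assumptions made for illustration. It shows how one reviewer’s answers to the three narrow questions become three separate labelled datasets, one for each classifier to be trained.

```python
from dataclasses import dataclass


@dataclass
class GranularReview:
    """One reviewer's answers to the narrow questions about a flagged post."""
    post_text: str
    uses_slur: bool
    references_protected_category: bool
    attacks_category: bool


# The three questions quoted in the article, as hypothetical field names.
QUESTIONS = ("uses_slur", "references_protected_category", "attacks_category")


def build_training_sets(reviews):
    """Turn each question into its own dataset of (text, answer) pairs."""
    datasets = {question: [] for question in QUESTIONS}
    for review in reviews:
        for question in QUESTIONS:
            answer = getattr(review, question)
            datasets[question].append((review.post_text, answer))
    return datasets


# Placeholder posts stand in for real flagged content.
reviews = [
    GranularReview("<post A>", uses_slur=True,
                   references_protected_category=True, attacks_category=True),
    GranularReview("<post B>", uses_slur=False,
                   references_protected_category=True, attacks_category=False),
]

datasets = build_training_sets(reviews)
for question, rows in datasets.items():
    print(question, rows)
```

The final decision on whether to delete a post still rests with a human reviewer, as in the article. The sketch covers only the by-product, the training data.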

And again, there are so many languages. Unlike the developers of language models, who can just decide to focus on “target languages” and delete everything else, social media knows no boundaries.

“The project also helps illustrate the scale of Facebook’s challenge. So far, its multilingual workarounds don’t work on languages for which the company has relatively small datasets, such as Burmese. The same challenge exists for Hausa, a West African language used in campaigns of anti-Muslim hate speech that local police told the BBC last month have led to more than a dozen murders. Facebook says it is expanding its relationship with Nigerian fact checking organizations and NGOs—as well as its use of machine learning to flag hate speech and violent images.”

WIRED quotes Facebook’s Chief Technology Officer, Mike Schroepfer: “My hope is that in two or three or five years there is so little of it on the site that it’s sort of ridiculous to argue that it’s having a big effect on the world.”

We all know how this prediction turned out. In November 2020, Facebook’s content moderators wrote a letter to company leadership.

“Without informing the public, Facebook undertook a massive live experiment in heavily automated content moderation. Management told moderators that we should no longer see certain varieties of toxic content coming up in the review tool from which we work— such as graphic violence or child abuse, for example. The AI wasn’t up to the job. Important speech got swept into the maw of the Facebook filter—and risky content, like self-harm, stayed up. The lesson is clear. Facebook’s algorithms are years away from achieving the necessary level of sophistication to moderate content automatically. They may never get there.”

The plight of content moderators continues - ordinary folk with no special professional training, lured by promises of working in big tech and then thrown into the toxic cesspool. When a vendor such as Cognizant or Accenture gets exposed as working for Facebook, it publicly cuts the contract. But the lure of enormous profits invariably leads to more deals, and there is plenty of demand from YouTube and others.

Facebook’s recent decision to stop moderating misinformation, made to appease Trump, does not mean there is no need for moderators. “I saw hundreds of beheadings,” says one content moderator in a striking documentary by CNBC. His job is safe.

The number of content moderators continued to grow, reaching hundreds of thousands, with multi-billion-dollar annual budgets, for Facebook alone.

But in the last few years, an even bigger army of data workers has emerged - not for content moderation, but for teaching language models - the subject of our story. Their numbers are in the millions, and the massive industry, led by new companies such as Scale AI and Sama, has a new euphemistic name, “data enrichment.”

In a recent panel discussion organized by the Partnership on AI (PAI), an industry-sponsored group, the topic was “Human Rights Due Diligence Frameworks & Ethical Data Enrichment Supply Chains” - basically, what companies should do to protect data workers.

“There are broad categories where we found risks existing,” says …, “And you will read these in the white paper PAI has put out. There are risks that are pretty analogous to the direct supply chain (factories, hardware, raw materials) - lower wages, lack of benefits, excessive working hours. The other bucket are risks similar to those you might find in content moderation: the online aspects, such as exposure to traumatic or graphic material. For example, if you are doing red teaming - asking people to break AI prompts by doing pretty intense things - asking AI to do graphic things or show graphic things - that can be a lot… Both areas have research and tools to protect the workers, but it’s complicated. For example, in a factory you could put workers’ rights on the wall. How do you do that for a virtual workforce, or someone doing this for a limited time? And who is keeping track of this inside the tech company - is it the supply chain department? But they do mostly hardware. Or is it the Responsible AI team? But their remit is mostly the “final product,” not how it’s made.”

Some other notes I took during the panel, demonstrating the abysmal state of the “data enrichment” industry today:

- “Uncertainty how to move forward”

- “Could use improvement”

- “Even within one company everyone is doing something different”

- “Everyone has a different risk appetite”

- “Process isn’t there”

- “Not incentivised to do the right thing”

The date was April 30, 2025. The video was viewed only 104 times.

If not content moderation, what exactly do these millions of data workers do? And why are they managed not by traditional generalist outsourcing vendors that span all sectors, like Cognizant and Accenture, but by a new generation of specialized companies like Scale AI and Sama?

We will answer this question once we train our model. Hint: many of them are experts - doctors, lawyers, scientists. They speak many languages, and their job - teaching AI - is even more nuanced and impactful than content moderation.

But first, let’s finish preparing our corpus.